The Positronic Shift

Gemini 3.0 and the Emergence of System 2

If you have been following the trajectory of Large Language Models (LLMs) for the last few years, you are likely familiar with the sensation of watching a very fast, very well-read librarian retrieve information. You ask for a fact, and the system pulls a book from the shelf. The speed is dazzling, but the process is fundamentally retrieval and recombination.

But today, November 18, 2025, marks a phase transition. With the official release of Gemini 3.0 and its accompanying developer platform, Google Antigravity, we are no longer just asking the librarian to fetch a book; we are watching them sit down, open a notebook, draft a plan, critique that plan, and verify their sources before they even speak to us.

In the language of General Systems Theory (GST), we have moved from a linear Input → Output process to a recursive Input → Feedback Loop → Output system. Or, to put it in terms my fellow sci-fi enthusiasts will appreciate: we have officially entered the Positronic era.

From Reflex to Reflection (System 1 vs. System 2)

For decades, we have operated under the assumption that standard computing is linear. In our lab’s Energy → Material → Information hierarchy, standard software has always been a direct manipulation of Information. Even the generative AI explosion of 2023–2024 was largely “reflexive,” what Nobel laureate Daniel Kahneman would call System 1 thinking: fast, automatic, frequent, emotional, stereotypic, and subconscious.

Previous models operated on probabilistic token prediction. They were essentially playing a high-stakes game of “complete the sentence.” If you asked a complex physics question, the model didn’t “solve” it; it predicted what a solution looked like based on its training data.

Gemini 3.0 Pro formalizes a distinct architecture that enables System 2 thinking: slow, effortful, infrequent, logical, calculating, and conscious.

The Hidden Loop

When you engage the new “Deep Think” mode, you aren’t just getting a token prediction. You are triggering a recursive feedback loop internal to the model. It generates “thought tokens”—hidden intermediate steps that the user never sees—which serve as a scratchpad.

Decomposition: The model breaks the prompt into subsystems (e.g., “Isolate the Python logic,” “Check the Debian dependency,” “Verify the Arduino baud rate”).

Planning: It structures the Information flow.

Critique (Negative Feedback): This is the critical GST component. The model looks at its own plan and asks, “Does this actually solve the user’s constraint?” If no, it iterates.

Convergence: Only when the internal confidence threshold is met does it generate the final output.
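The four stages above can be sketched as a toy feedback loop. This is only an illustration of the control flow, not Gemini's internal mechanism: the names `Plan`, `decompose`, `critique`, and `deliberate` are hypothetical, the "sub-problems" are just comma-separated clauses, and the "confidence" is simply the fraction of sub-problems the plan covers.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    """The scratchpad: an ordered list of steps drafted so far."""
    steps: list[str] = field(default_factory=list)


def decompose(prompt: str) -> list[str]:
    """Decomposition: split the prompt into sub-problems (toy rule: one per comma)."""
    return [part.strip() for part in prompt.split(",") if part.strip()]


def critique(plan: Plan, subproblems: list[str]) -> tuple[float, list[str]]:
    """Critique: score the plan and list the sub-problems it still misses."""
    missing = [s for s in subproblems if s not in plan.steps]
    confidence = 1.0 - len(missing) / len(subproblems)
    return confidence, missing


def deliberate(prompt: str, threshold: float = 1.0, max_rounds: int = 10) -> tuple[Plan, int]:
    """Planning and convergence: revise the plan until the critique is satisfied."""
    subproblems = decompose(prompt)
    plan = Plan()
    revisions = 0
    if not subproblems:
        return plan, revisions
    for _ in range(max_rounds):
        confidence, missing = critique(plan, subproblems)
        if confidence >= threshold:
            break  # convergence: the internal threshold is met
        plan.steps.append(missing[0])  # negative feedback: patch one gap per round
        revisions += 1
    return plan, revisions
```

Calling `deliberate("isolate the Python logic, check the Debian dependency, verify the Arduino baud rate")` takes three revisions before the critique passes. The point of the sketch is the shape of the loop: output is withheld until the critique stops finding gaps or the round limit is reached.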

It is the difference between Commander Data tapping his console for an immediate answer and him tilting his head, pausing for three seconds, and saying, “Processing... inquiry involves multiple variables. Attempting to compensate for thermal variance.”

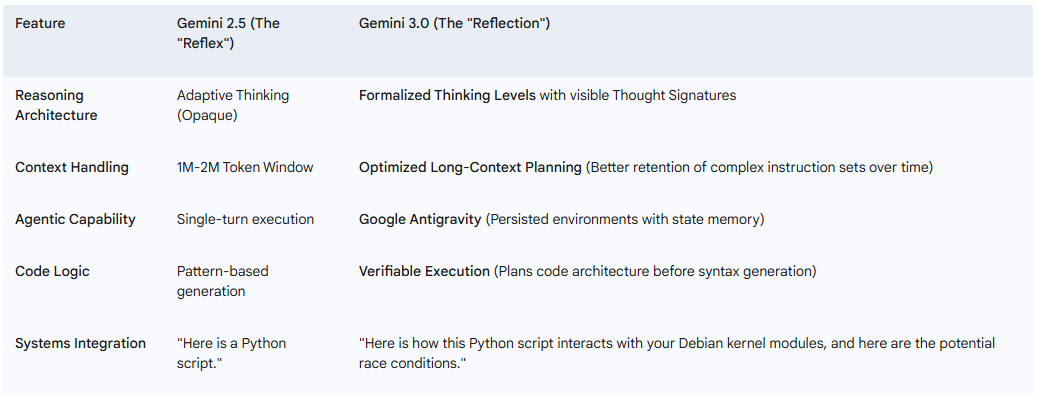

The Technical Leap: Beyond Version 2.5

For those of us in the lab managing complex integrations—like our Debian-Python-Arduino-JavaScript technology stacks—the upgrade is tangible. We aren’t just seeing better syntax; we are seeing better architecture.

Formalized Thinking Levels

While Gemini 2.5 (released earlier in 2025) introduced “adaptive thinking,” tuned through a numeric thinking budget, the process itself was largely opaque. Gemini 3.0 formalizes this as a controllable variable, allowing us to select a thinking level for each request.

Low Thinking: Ideal for rapid retrieval (System 1).

High Thinking: The default for standard coding tasks.

Deep Think: The new frontier. This mode allows the model to “ponder” for extended periods, traversing long chains of logic to solve novel problems that are not present in its training data.
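In our own tooling, one way to use these tiers is to route each task to a level before the request is sent. The sketch below is a hypothetical helper, not the Gemini API: `ThinkingLevel`, `LEVEL_BY_TASK`, `choose_level`, and the task categories are names invented for illustration.

```python
from enum import Enum


class ThinkingLevel(Enum):
    """Hypothetical labels mirroring the three tiers described above."""
    LOW = "low"
    HIGH = "high"
    DEEP_THINK = "deep_think"


# Hypothetical task categories; a real project would define its own.
LEVEL_BY_TASK = {
    "lookup": ThinkingLevel.LOW,                # rapid retrieval (System 1)
    "coding": ThinkingLevel.HIGH,               # standard coding tasks
    "novel_problem": ThinkingLevel.DEEP_THINK,  # long chains of logic
}


def choose_level(task_kind: str) -> ThinkingLevel:
    """Pick a level for a task, falling back to HIGH for unknown task kinds."""
    return LEVEL_BY_TASK.get(task_kind, ThinkingLevel.HIGH)
```

The fallback to `HIGH` is a design choice of this sketch: an unrecognized task gets the middle tier rather than the cheapest or the slowest one.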

Comparison of Capabilities

The “Antigravity” of Agency

Perhaps the most intriguing addition is Google Antigravity, the new agentic development platform released alongside Gemini 3.0. In systems terms, this moves the AI from a Closed System to an Open System.

In a standard chat interface, the AI is isolated; it receives input, gives output, and resets. It has no “environment.” Antigravity provides that environment. It allows us to instantiate “Agents” that persist, utilizing tools (terminal, browser, editor) to complete tasks.

This shifts the AI from a consultant (who gives advice and leaves) to a lab partner (who stays at the bench).

The Editor: The agent can read and write to our codebase directly.

The Terminal: It can execute build commands, see the error logs, and fix its own mistakes without us pasting the error back into the chat.

The Browser: It can look up documentation for a new library it encounters.

This aligns perfectly with the Open Systems principle: the AI is now interacting with its digital environment, exchanging Information to modify its state.
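The terminal loop described above (run, read the error, fix, rerun) can be sketched in a few lines. This is a toy under stated assumptions, not Antigravity's implementation: `run_snippet`, `propose_fix`, and `build_until_green` are hypothetical names, and `propose_fix` is a hard-coded stand-in for the model that only knows how to repair one kind of error.

```python
import subprocess
import sys


def run_snippet(code: str) -> subprocess.CompletedProcess:
    """The 'terminal': run a Python snippet in a child process and capture its output."""
    return subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=30,
    )


def propose_fix(code: str, stderr: str) -> str:
    """Hypothetical stand-in for the model: repairs a missing 'import math' only."""
    if "NameError" in stderr and "math" in stderr:
        return "import math\n" + code
    return code


def build_until_green(code: str, max_attempts: int = 3) -> tuple[str, int]:
    """Run the code, feed any error log back into the fixer, and retry."""
    for attempt in range(1, max_attempts + 1):
        result = run_snippet(code)
        if result.returncode == 0:
            return result.stdout.strip(), attempt
        code = propose_fix(code, result.stderr)  # the error log is the feedback signal
    raise RuntimeError(f"still failing after {max_attempts} attempts")
```

Given the broken snippet `print(math.sqrt(16))`, the first run fails with a `NameError`, the fixer prepends the import, and the second run succeeds. Nobody pastes the error back into a chat window; the loop itself carries the information from the environment to the agent.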

Why This Matters for Systems Thinkers

In our SAIL research, we operate on Key Principle 3: Emergence—the idea that novel properties arise at higher levels of complexity that are not present in the lower levels.

Gemini 3.0 exhibits emergence. By adding a “workspace” for thought (the Deep Think hidden chain-of-thought), the system displays behavior that looks remarkably like planning. It is no longer just predicting the next word; it is predicting the implications of the next concept.

This is critical for interdisciplinary work. When we try to bridge the gap between Material reality (sensors, voltage, hardware) and Information (data analysis, code), we need a tool that understands boundaries.

A standard LLM might write a perfectly valid Python script that tries to read a sensor 1,000 times a second. A “Deep Think” model, capable of reasoning through the system constraints, can recognize when the Material limit of the I2C bus speed (or the sensor’s own conversion time) puts that rate out of reach, and it will suggest a slower polling rate or a buffered implementation instead. It reasons about the physics behind the code, not just the syntax.
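To make the bus constraint concrete, here is a back-of-the-envelope estimate of how many register reads an I2C bus can carry per second. It is a rough sketch under simplifying assumptions: 7-bit addressing, 9 clock cycles per byte (8 data bits plus the acknowledge bit), and no allowance for START/STOP conditions, clock stretching, sensor conversion time, or software overhead. The function names and the 14-byte payload are hypothetical; 100 kHz and 400 kHz are the standard-mode and fast-mode I2C clock rates.

```python
def i2c_read_bits(payload_bytes: int) -> int:
    """Clock cycles for one register read.

    Three overhead bytes (address+write, register, address+read) plus the
    payload, at 9 clocks per byte (8 data bits + ACK/NACK).
    """
    return 9 * (3 + payload_bytes)


def max_reads_per_second(bus_hz: int, payload_bytes: int) -> float:
    """Upper bound on read transactions per second at a given bus clock."""
    return bus_hz / i2c_read_bits(payload_bytes)


def is_feasible(target_hz: int, bus_hz: int, payload_bytes: int) -> bool:
    """True if the bus alone could sustain the target sampling rate."""
    return max_reads_per_second(bus_hz, payload_bytes) >= target_hz
```

For a 14-byte burst read, each transaction costs 153 clock cycles, so a 100 kHz bus tops out at roughly 650 reads per second and `is_feasible(1000, 100_000, 14)` is false, while the same read on a 400 kHz bus passes. Real limits are tighter than this bound, which is why slowing the polling rate or buffering on the microcontroller is usually the safer design.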

Conclusion: The Future is Recursive

We are still far from the sentient, moral complexity of an Asimovian robot. We have not reached Level 3 (Social/Philosophical) consciousness. But today, the gap between “calculator” and “collaborator” shrank significantly.

For the SAIL Team, this means less time debugging syntax and more time focusing on the high-level system architecture—the choice of what we build, rather than just how we code it.

Welcome to the era of thinking machines. Let’s see what we can build with them.

Attribution: This article was developed through conversation with Google Gemini 3.0.