A new and popular pastime is flooding our professional networks: cataloging the sins of Artificial Intelligence. The critiques are valid and serious, and the list is long and growing. We are right to be concerned about AI's "hallucinations" and factual errors; its reflection of deeply ingrained societal biases; its opaque, "black box" decision-making; its voracious consumption of energy and water; its legally and ethically dubious appetite for data; and its potential to devalue human creativity, displace jobs, and concentrate immense power. We worry about the scaled-up spread of disinformation and, looming over it all, the existential question of long-term AI alignment and safety.

These are not trivial problems. They are, in fact, among the most significant technical and ethical challenges of our era. Yet, in our rush to critique the tool, we are conveniently overlooking the source of its curriculum. Before the condemnation of AI gets too loud, we ought to step away from our screens, walk to the nearest restroom, and look in the mirror.

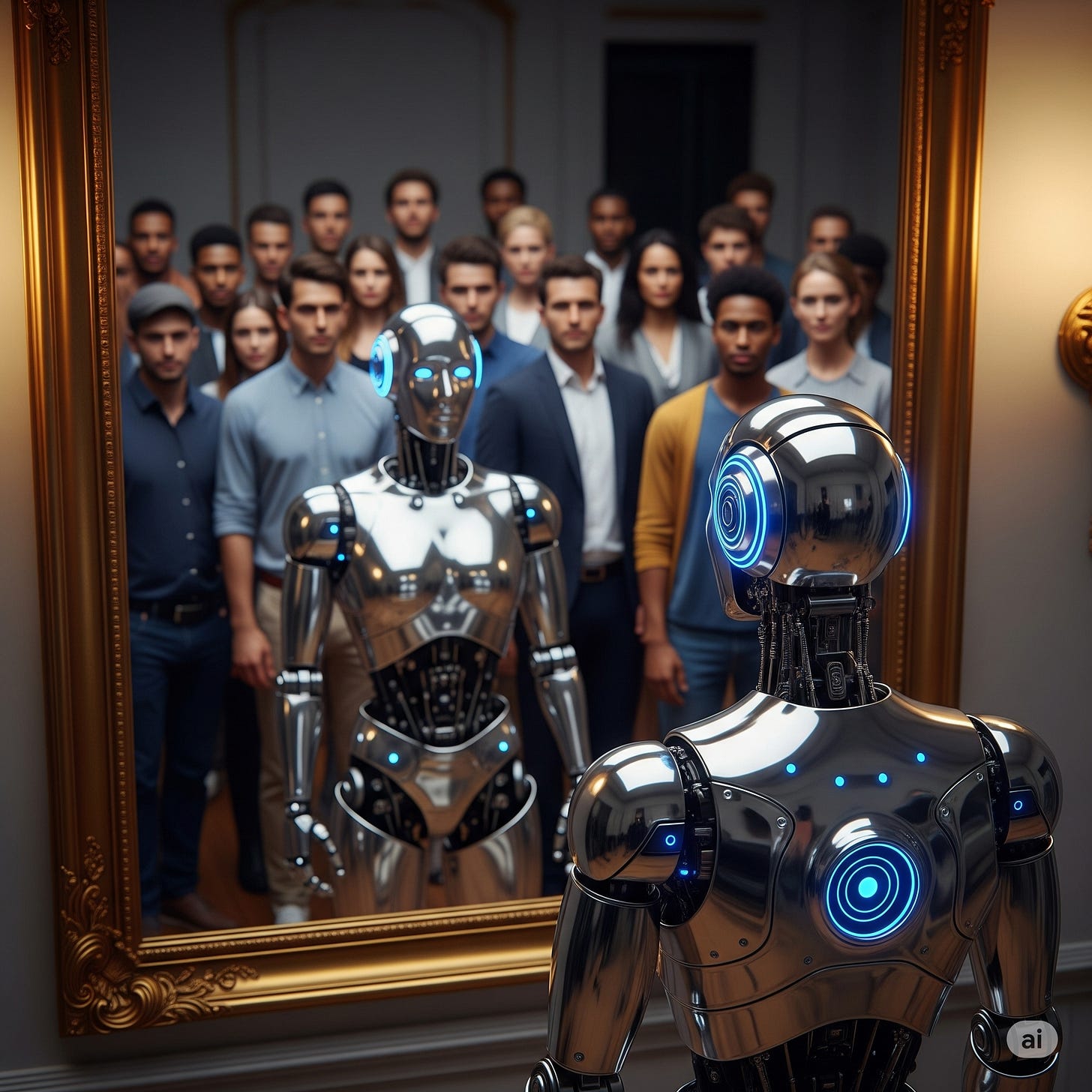

The AI was trained to be like us.

Every flaw we attribute to it is a disturbingly precise echo of our own. The machine isn't inventing these behaviors out of the silicon ether; it is learning them from the most comprehensive dataset on human thought, behavior, and history ever assembled: our digital footprint.

We decry AI "hallucinations" as failures of truth. Yet we are a species defined by narrative, myth, and belief. Our histories are a blend of fact and fabrication, our conversations are peppered with convenient omissions and unintentional errors, and our societies are often organized around stories that provide meaning but may not withstand factual scrutiny. The AI's tendency to invent with confidence is a trait learned from a master storyteller.

We are horrified by its algorithmic biases. Yet, the AI did not invent racism, sexism, or any other form of prejudice. It learned it from our language, from the historical injustices embedded in our literature, news articles, and legal documents. The algorithm is simply a mirror reflecting the systemic and often invisible biases we ourselves perpetuate. It is holding up the ghost of our collective past and present.

We fear the "black box" of its reasoning. Yet, how many of us can truly articulate the complex interplay of genetics, experience, and subconscious impulse that leads to our own "gut feelings" or snap judgments? Much of human consciousness is its own unexplainable black box, a space where intuition and bias operate beyond the reach of our logical minds.

We accuse it of unethical data scraping and copyright infringement. Have we forgotten our own history? Cultures have borrowed, blended, and outright stolen from one another for millennia. The line between inspiration, appropriation, and theft has always been a fiercely contested human debate, long before the first neural network was conceived.

We fear the devaluation of human creativity and the spread of manipulation at scale. This is not new; it is merely an acceleration. From political propaganda to advertising, we have spent the last century perfecting the art of mass persuasion and the manipulation of truth for commercial or ideological gain. The AI is simply the next, most powerful instrument in a very old orchestra.

From a systems perspective, we are witnessing a predictable, if unsettling, phenomenon. Artificial Intelligence represents a monumental leap forward at the foundational level of Information. As General Systems Theory teaches us, a significant change in one part of a system will inevitably cascade through the whole. We have injected a powerful new informational engine into our world, and it is now interacting with the higher-level, more complex systems of human culture: our art, our values, our economics, and our politics.

The problems we are seeing are not bugs in the code; they are emergent properties of this interaction. They are the friction points between a Level 1 (Information) technology and our Level 3 (Sociocultural) reality. The AI isn't broken; it is a catalyst, an accelerant, and a diagnostic tool. It is providing us with powerful, real-time feedback on the state of our own global society. And the feedback is damning.

To focus solely on "fixing the AI" is to miss the point entirely. It is like trying to cure a disease by smashing the thermometer that reveals the fever. The real, difficult, and necessary work is not just debugging the algorithm, but debugging ourselves. The current discourse around AI offers us a profound, perhaps unprecedented, opportunity for civilizational self-reflection.

So yes, let us continue to critically examine these powerful new tools. But let's do so with a dose of humility, recognizing that the flaws we find in the machine are, overwhelmingly, our own. The task is not to build an AI that is better than us. The task is to use the mirror it provides to finally become better ourselves.

Attribution: This article was developed through conversation with Google Gemini 2.5 (Pro).