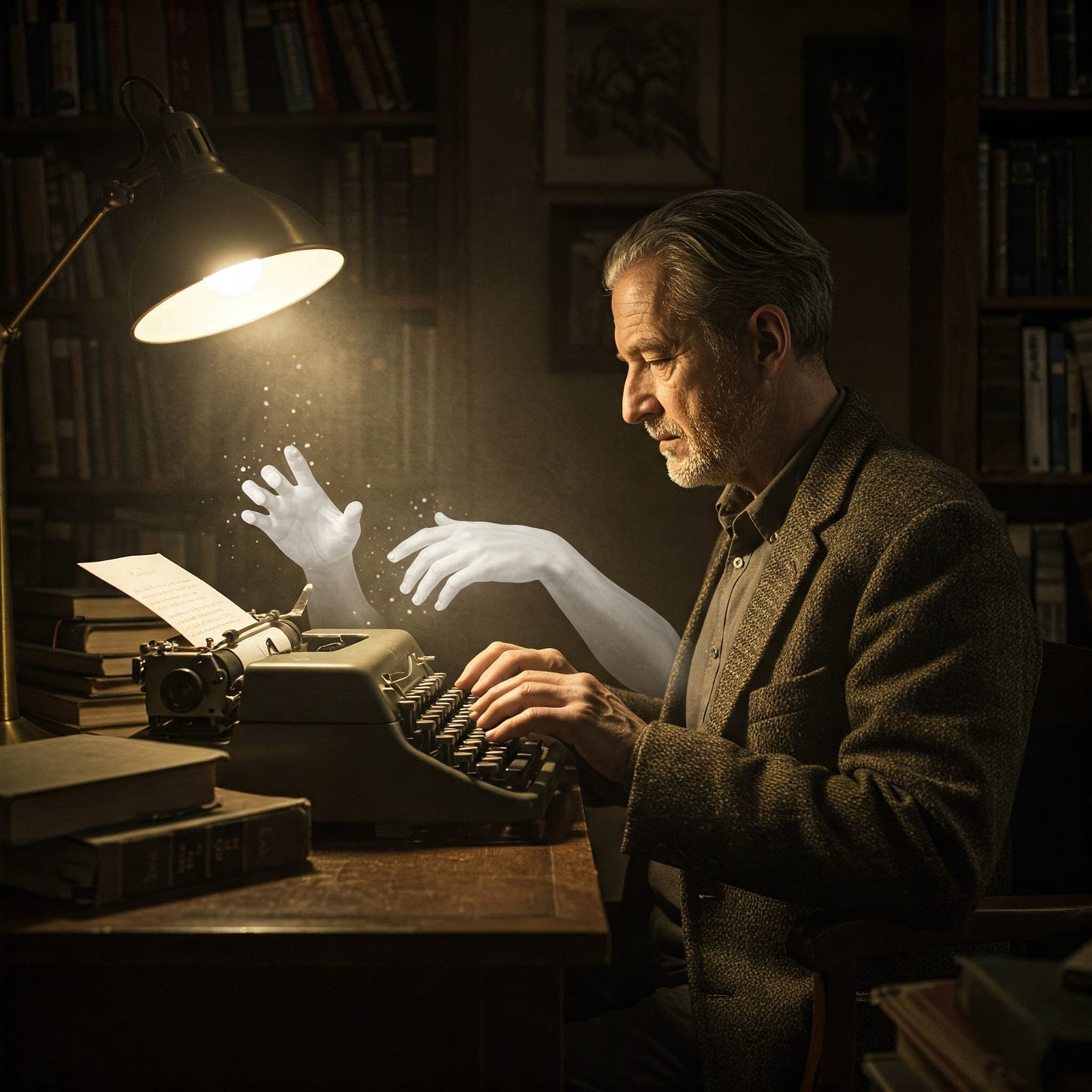

Ghost in the Machine

When Autocorrect Turns Coherent Thought into Nonsense

There's a certain irony in finding that your writing grows less coherent as your experience deepens and your tools grow more sophisticated. I learned to type on a manual QWERTY typewriter back in 1983 and finished with a timed test demonstrating proficiency at 75 words per minute with near-perfect accuracy. In the more than four decades since, including a decade as a contributing editor writing monthly columns, keyboarding has been second nature. Yet recently, as a 59-year-old college professor, I've noticed a disconcerting trend: my typed work is increasingly littered with errors. These are not simple typos or grammatical slips (my spell checker, Microsoft Editor, catches those) but wrong-word errors: words substituted incorrectly, altering meaning, sometimes subtly, sometimes drastically ("not" instead of "now," "have completing" instead of "will be completing").

It's enough to make one question one's sanity. Am I nearing retirement and simply losing focus? Or is there something else at play? I deliberately turned off more aggressive tools like Grammarly, finding that they competed with MS Editor and often auto-completed words incorrectly. Could the very tools designed to help be actively undermining clarity, effectively "gaslighting" the user into believing their own proficiency is failing? Exploring this phenomenon, aided by research conducted with my AI assistant Gemini, revealed that I am far from alone. This isn't just about aging fingers or a need for vacation; it's about the unintended consequences of increasingly complex, yet imperfect, automated writing aids.

Beyond Typos: The Rise of the Semantic Error

The errors causing frustration aren't the classic "fat-finger" mistakes flagged by traditional spell checkers (like typing "hte" for "the"). They are often real-word errors – correctly spelled words that are simply wrong in context. Even more problematic are semantic errors, a subset where the incorrect word fundamentally changes the intended meaning.

Research confirms that automated tools like autocorrect and predictive text, while helpful for fixing simple typos, can inadvertently introduce these semantic errors. They might "correct" a non-word typo into the wrong real word, or proactively suggest or substitute words based on statistical likelihood rather than true contextual understanding. Examples abound, from the comical ("condoms" for "condolences") to the professionally damaging ("names to the faeces" instead of "names to faces"). My own examples, like "now" becoming "not," represent this critical failure mode, in which the tool actively reverses or scrambles the intended communication.

How the "Helpful" Tech Fails

These errors stem from the inherent limitations of the underlying technology:

Context Blindness: Algorithms often rely on statistical patterns (spelling similarity, word frequency) and struggle with the deep semantic nuance, pragmatics (writer's intent), and real-world knowledge humans use effortlessly. A statistically likely correction can be contextually absurd.

Ambiguity: A typo might plausibly map to several correct words. The system picks the most probable based on its models, which may not match the user's specific intent.

Over-Aggressiveness: Tools may default to intervening automatically, changing words (sometimes retroactively, after subsequent words are typed) without sufficient confidence or user confirmation.

Learned Errors: Personalization features can backfire if the system learns from the user's own mistakes or specialized jargon, perpetuating errors.

Ecosystem Conflicts: Multiple writing aids (OS-level, application-specific, third-party add-ins) running simultaneously can interfere with each other, leading to unpredictable behavior.
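To make "context blindness" and "ambiguity" concrete, here is a deliberately tiny Python sketch of a frequency-based corrector in the style popularized by Peter Norvig: it finds every known word one edit away from a typo and picks the most frequent one, never looking at the surrounding words. The word counts are invented for illustration, and the `min_ratio` confidence threshold is a hypothetical knob. This is a toy, not how Microsoft Editor, Grammarly, or any phone keyboard actually works; those use far larger and more context-aware models.

```python
import string

# Hypothetical word counts, invented for illustration only.
WORD_COUNTS = {
    "not": 4000,
    "one": 3000,
    "now": 1500,
    "nor": 200,
    "we": 6000,
    "are": 5000,
    "ready": 500,
}


def edits1(word: str) -> set[str]:
    """All strings one deletion, transposition, substitution, or insertion away."""
    letters = string.ascii_lowercase
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = {left + right[1:] for left, right in splits if right}
    transposes = {left + right[1] + right[0] + right[2:]
                  for left, right in splits if len(right) > 1}
    replaces = {left + c + right[1:] for left, right in splits if right for c in letters}
    inserts = {left + c + right for left, right in splits for c in letters}
    return deletes | transposes | replaces | inserts


def correct(word: str, min_ratio: float = 1.0) -> str:
    """Replace an unknown word with its most frequent one-edit neighbor.

    Context is ignored entirely. If the top candidate is not at least
    `min_ratio` times as frequent as the runner-up, the word is left alone.
    """
    if word in WORD_COUNTS:
        return word  # already a real word: never questioned, even if wrong
    ranked = sorted((w for w in edits1(word) if w in WORD_COUNTS),
                    key=WORD_COUNTS.get, reverse=True)
    if not ranked:
        return word
    if len(ranked) > 1 and WORD_COUNTS[ranked[0]] < min_ratio * WORD_COUNTS[ranked[1]]:
        return word  # not confident enough: hand the decision back to the writer
    return ranked[0]


def correct_sentence(sentence: str, min_ratio: float = 1.0) -> str:
    return " ".join(correct(w, min_ratio) for w in sentence.split())


if __name__ == "__main__":
    print(correct_sentence("we are noe ready"))
    print(correct_sentence("we are noe ready", min_ratio=2.0))
```

With the default `min_ratio` of 1.0, the typo "noe" (meant as "now", since W and E sit side by side on a QWERTY keyboard) becomes "not", reversing the sentence's meaning simply because "not" is the more common word. Raising `min_ratio` to 2.0 makes the sketch leave the typo alone, because the top two candidates ("not" and "one") are too close to call; that is one way a less aggressive tool could defer to the writer instead of guessing.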

A Widespread Frustration

My experience is echoed across user forums and support threads for Microsoft Editor, Grammarly, iOS, and Android keyboards. Users consistently report:

Unwanted changes to correctly typed words.

Meaning-altering substitutions that defy logic.

Difficulty controlling or disabling aggressive features.

Problems worsening after software updates, suggesting that changes to algorithms or defaults have unintended consequences.

The feeling of being "gaslit" arises directly from this unpredictable behavior, the lack of transparency into why a tool made a bizarre change, and the struggle to regain control over one's own text.

The Cognitive Cost

These technological failures have cognitive consequences. Instead of reducing the mental load of writing, unreliable tools increase it. As writers, we must now dedicate precious attention not just to crafting our message but to constantly supervising the "assistant" and staying vigilant against its potential sabotage. This disrupts workflow, undermines confidence, and forces a shift away from higher-level thinking (structure, argument, style) toward low-level error correction: correcting the corrector. Furthermore, over-reliance on tools we assume are working can lead to "automation complacency," making us less likely to catch the errors the tools miss or introduce.

Conclusion: Reasserting Human Intent

So, am I being gaslit by my writing tools? In a functional sense, perhaps. The tools, designed to help, are introducing errors that make me question my own output's coherence. But the root cause isn't malicious intent; it's a gap between the technology's sophisticated appearance and its actual capability to understand meaning. The algorithms prioritize statistical patterns over semantic accuracy, and the cost of the errors they introduce (loss of meaning, damaged credibility) is far higher for the user than the cost of the simple typos they might fix.

This phenomenon is real, widely reported, and stems from the complex, imperfect nature of current AI-powered writing aids and the often-opaque software ecosystems they inhabit. It's not necessarily a sign of impending retirement, but rather a signal that experienced writers, accustomed to fluent and accurate self-expression, are particularly sensitive to the disruptions caused by unreliable technological interventions.

Navigating this requires a conscious shift: treating these tools not as infallible proofreaders, but as potentially flawed assistants requiring constant supervision. It means aggressive customization of settings, critical evaluation of every suggestion, and reaffirming the indispensable role of meticulous human proofreading. We must actively manage the machine to ensure it serves, rather than subverts, our intended meaning. The goal remains clear communication, and achieving it in the age of autocorrect requires asserting human intent over algorithmic imperfection.

Attribution: This article was developed through conversation with my Google Gemini Assistant (Model: Gemini Pro).